This is the 5th in a series of articles from Mac Expert Lloyd Chambers examining the many considerations Mac users must make when choosing the best Mac for their needs.

In The Ultimate Mac Buyer’s Guide, Part 1, he outlined how best to choose a Mac for your needs. In Part 2, he discussed the huge value of new vs used Macs. In Part 3, he discussed high-end configurations. In Part 4, How Much Memory Does Your Workflow Require?, he outlined how to determine the optimal amount of memory for your workflow. Before digging into Part 5, be sure to read Part 4 first, because too little memory degrades everything, no matter how many CPU cores you have.

Here, I discuss the general principles governing how many CPU cores make sense for your tasks. A subsequent article will show how to actually check out your own specific workflow and applications for CPU core usage and scalability.

CPU core usage is more complex on Apple Silicon (M-Series) processors because they have both “performance” and “efficiency” cores. For our purposes here, I ignore that difference. In practice it matters little when evaluating workflow needs, and even less on the M3 series chips, whose efficiency cores are faster than ever before.

What is a CPU core?

A CPU core executes computer instructions. It is what makes most things happen on your computer, under the covers.

On modern chipsets, a CPU core is one of many, each capable of executing instructions on its own, independently of the others. Originally, a “CPU” was synonymous with a physical CPU chip with one (1) CPU core on that chip. Then came chips with 2 CPU cores, then 4, and so on.

Today’s CPU chips contain 16 CPU cores on Apple’s M3 Max system on a chip (SoC) and 24 CPU cores on the M2 Ultra. A forthcoming M3 Ultra (two conjoined M3 Max chips) is expected to have 32 CPU cores. Some non-Apple CPU chipsets have as many as 96 CPU cores!

More CPU cores are usually better, but not always.

Here in 2024 most software now behaves very nicely given more CPU cores, but there can be cases where more CPU cores slow things down due to the overhead of coordinating them. That is a software implementation deficiency for particular software, however, not a general limitation.

CPU cores are general purpose. A future article will discuss GPU (graphics) cores, which are optimized for graphics and AI with highly specialized instructions.

By opening /Applications/Utilities/System Information.app on your Mac, you can see the number of CPU cores on your machine, listed under Hardware Overview as Total Number of Cores.
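If you prefer to check from a script, here is a minimal Python sketch. `os.cpu_count()` reports the logical CPUs the OS exposes; Apple Silicon has no hyperthreading, so there this equals the physical core count (on Intel Macs with Hyper-Threading it is typically double). The performance/efficiency split assumes the macOS `sysctl` keys `hw.perflevel0.physicalcpu` and `hw.perflevel1.physicalcpu`; the function names are hypothetical, and the split query simply returns `None` wherever those keys are unavailable.

```python
import os
import platform
import subprocess
from typing import Dict, Optional


def logical_cpu_count() -> int:
    """CPU cores the OS exposes to programs (os.cpu_count() may return None)."""
    return os.cpu_count() or 1


def apple_silicon_core_split() -> Optional[Dict[str, int]]:
    """Performance vs efficiency core counts on macOS, else None.

    Assumes the sysctl keys hw.perflevel0.physicalcpu (performance cores)
    and hw.perflevel1.physicalcpu (efficiency cores) exist on this Mac.
    """
    if platform.system() != "Darwin":
        return None
    counts = {}
    for label, key in (("performance", "hw.perflevel0.physicalcpu"),
                       ("efficiency", "hw.perflevel1.physicalcpu")):
        try:
            out = subprocess.run(["sysctl", "-n", key], capture_output=True,
                                 text=True, check=True).stdout
            counts[label] = int(out.strip())
        except (OSError, subprocess.CalledProcessError, ValueError):
            return None  # key missing (e.g. Intel Mac) or sysctl unavailable
    return counts


if __name__ == "__main__":
    print("CPU cores:", logical_cpu_count())
    split = apple_silicon_core_split()
    if split:
        print("Performance/efficiency cores:", split)
```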

What is Scalability?

Scalability indicates the efficiency with which more CPU cores can complete the task relative to fewer cores. Perfect scalability means that n CPU cores complete the task in 1/n the time of one CPU core.

For example, a task that would take 24 seconds with one CPU core could (ideally) finish in 1 second using 24 CPU cores. It is never that simple, but that’s the idea.
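The definition above can be written as two small ratios: speedup (one-core time divided by n-core time) and scaling efficiency (speedup divided by n, where 1.0 is perfect). A minimal Python sketch follows; the function names and the second set of timings are hypothetical, chosen only for illustration.

```python
def speedup(t_one_core: float, t_n_cores: float) -> float:
    """How many times faster the task ran with n cores than with one core."""
    return t_one_core / t_n_cores


def scaling_efficiency(t_one_core: float, t_n_cores: float, n: int) -> float:
    """Speedup divided by core count: 1.0 means perfect scalability."""
    return speedup(t_one_core, t_n_cores) / n


# The ideal case from the text: 24 seconds on 1 core, 1 second on 24 cores.
print(speedup(24.0, 1.0))                 # 24.0
print(scaling_efficiency(24.0, 1.0, 24))  # 1.0
# A hypothetical, less ideal run: 24 seconds on 1 core, 2 seconds on 24 cores.
print(scaling_efficiency(24.0, 2.0, 24))  # 0.5
```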

With well-designed software, more CPU cores work together in parallel on separate parts of the task, and thereby finish that task much faster. But in practice, this often does not fully work out, for several reasons listed under Constraints on parallelizable tasks below.

In practice, scalability is never perfect, because there is always at least a little overhead. However, extremely well-written software can achieve 99.9% scalability, as my own diglloydTools IntegrityChecker does, provided that disk I/O is fast enough that the software is not starved for data to process.

Example: below, SHA-512 cryptographic hashing throughput is 99.9% scalable. In this case there is no disk I/O, and memory bandwidth is ample on the Apple Silicon Macs. Note: the axis is not scaled linearly due to problems with Excel graphing, but the numbers bear out the scalability.

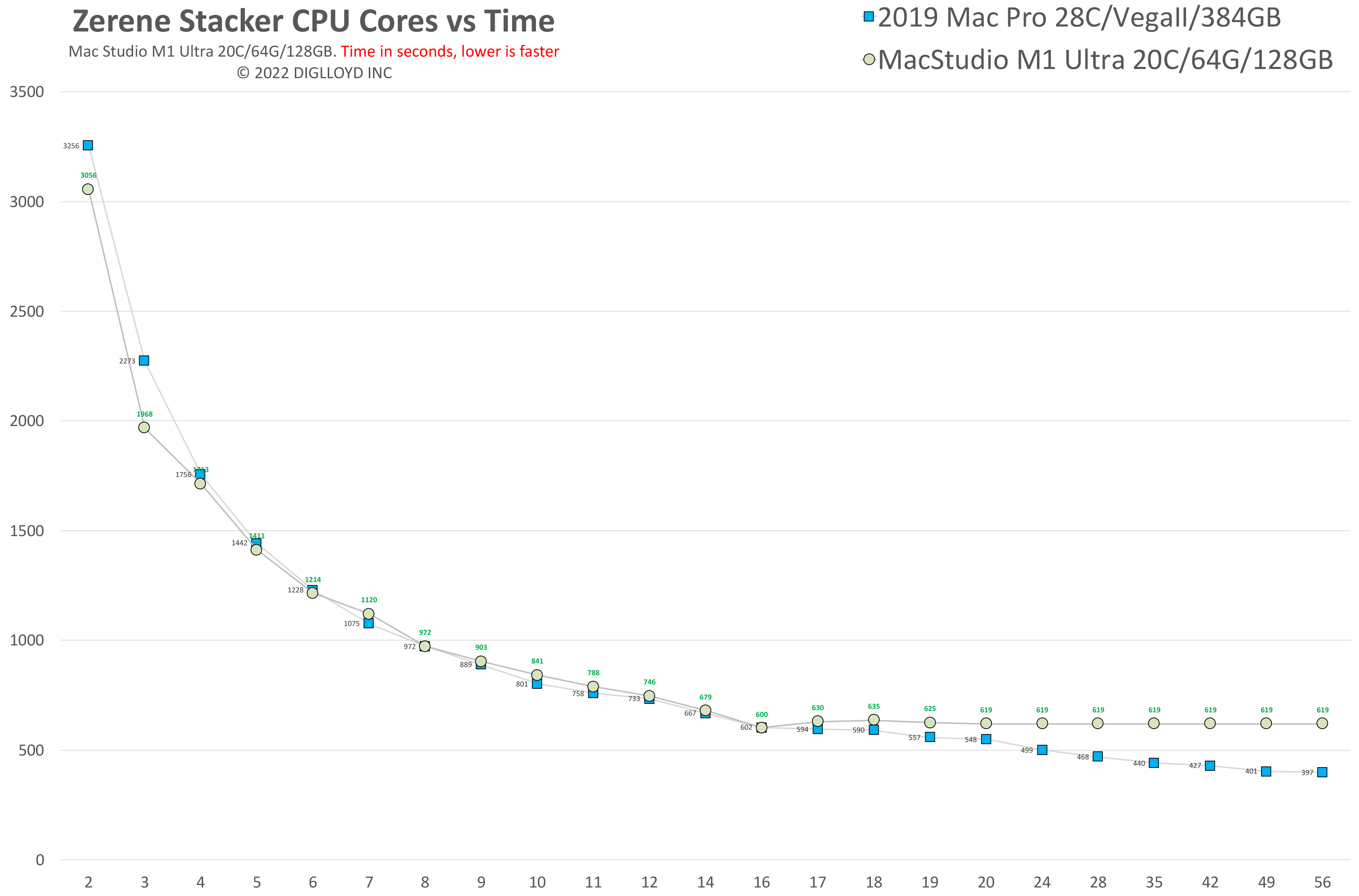
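Why does SHA-512 hashing scale so well? Each buffer's hash depends on nothing but that buffer, so every core can work on its own buffer without waiting for the others. Here is a minimal Python sketch of that idea, with hypothetical function names and a made-up in-memory workload; it is not how IntegrityChecker is implemented. It relies on CPython's `hashlib` releasing the GIL while hashing larger buffers, which is what lets plain threads keep several cores busy.

```python
import hashlib
import os
from concurrent.futures import ThreadPoolExecutor
from typing import List


def sha512_hex(data: bytes) -> str:
    """Hash one independent buffer; no other buffer's result is needed."""
    return hashlib.sha512(data).hexdigest()


def hash_all(buffers: List[bytes], workers: int) -> List[str]:
    """Hash many buffers in parallel, returning digests in input order.

    hashlib releases the GIL while hashing larger buffers, so these
    threads can run on separate CPU cores at the same time.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(sha512_hex, buffers))


if __name__ == "__main__":
    # Hypothetical workload: 64 in-memory buffers of 4 MiB each (no disk I/O).
    buffers = [os.urandom(4 * 1024 * 1024) for _ in range(64)]
    digests = hash_all(buffers, workers=os.cpu_count() or 1)
    print(len(digests), digests[0][:16])
```

Timing `hash_all` with `workers=1` and then with more workers is a simple way to watch scalability on your own Mac; the 99.9% figure above is the author's measurement, not something this sketch guarantees.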

Example: In the graph below, the number of CPU cores has doubled, but the runtime has been reduced by only 17%. Zerene Stacker is highly efficient at using CPU cores, but focus stacking has inherent chokepoints that make full parallelism impossible.
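A 17% reduction from doubling the cores can be turned into a rough estimate of how much of the run did not benefit. The sketch below uses the Karp–Flatt metric (an experimentally determined serial fraction), which assumes a simplified model: part of the runtime does not speed up at all and the rest scales perfectly. Real chokepoints in focus stacking need not behave that neatly, and the 100 to 83 timings are just the 17% figure normalized, not measurements. The function name is hypothetical.

```python
def non_scaling_fraction(t_before: float, t_after: float, core_factor: float) -> float:
    """Karp-Flatt serial fraction relative to the 'before' run.

    Assumes the runtime splits into a part that did not speed up at all and
    a part that sped up exactly core_factor times when cores were multiplied
    by core_factor.
    """
    if core_factor <= 1:
        raise ValueError("core_factor must be greater than 1")
    ratio = t_after / t_before
    return (ratio - 1 / core_factor) / (1 - 1 / core_factor)


# Doubling the cores cut runtime by 17%: 100 -> 83 (normalized units).
print(round(non_scaling_fraction(100.0, 83.0, 2), 2))  # 0.66
```

Under that model, about two thirds of the original runtime behaved as if serialized, which is why twice the cores bought only 17%.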

Serialized vs Parallel Tasks and CPU Cores

Many factors can interact to reduce scalability.

The most troublesome situation is a serialized task: one that cannot be broken into pieces but must run strictly from start to finish, with each step depending on the previous one. More CPU cores can do nothing to speed up such a task, since only one CPU core can work on it at a time. You cannot bake a cake without first mixing the ingredients and heating the oven.
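A hash chain is a compact illustration of a serialized task: each step hashes the output of the previous step, so step 2 cannot start until step 1 has finished, and any extra CPU cores sit idle. A minimal Python sketch, with a hypothetical function name:

```python
import hashlib


def hash_chain(seed: bytes, steps: int) -> bytes:
    """Repeatedly hash the previous result.

    Step i needs the output of step i - 1, so the steps must run one after
    another on a single core; there is nothing for other cores to do.
    """
    digest = seed
    for _ in range(steps):
        digest = hashlib.sha512(digest).digest()
    return digest


print(hash_chain(b"start", 10_000).hex()[:16])
```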

A task that can be broken up into separate pieces and can be computed simultaneously is called a parallelizable task. For example, adding a million numbers together can be done in any order and with any number of CPU cores, by splitting up the numbers into n batches for n CPU cores, then adding the sums of each batch together.
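The million-number example maps directly onto code: split the numbers into n batches, sum each batch independently, then add the per-batch sums in one short serial step. The Python sketch below (hypothetical function names) shows that decomposition. One caveat: in standard CPython, threads running pure-Python code such as `sum()` take turns because of the GIL, so a real speedup would need separate processes or native code; the point here is the structure of the computation, not Python threading performance.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split_into_batches(numbers: List[int], n: int) -> List[List[int]]:
    """Split numbers into at most n contiguous, roughly equal batches."""
    size = max(1, -(-len(numbers) // n))  # ceiling division, at least 1
    return [numbers[i:i + size] for i in range(0, len(numbers), size)]


def batched_sum(numbers: List[int], n: int) -> int:
    """Sum each batch independently, then add up the per-batch sums."""
    batches = split_into_batches(numbers, n)
    # Each batch could run on its own core: no batch needs another's result.
    # (In standard CPython, pure-Python threads share the GIL; see lead-in.)
    with ThreadPoolExecutor(max_workers=n) as pool:
        partial_sums = list(pool.map(sum, batches))
    return sum(partial_sums)  # short serial step that combines the results


numbers = list(range(1_000_000))
print(batched_sum(numbers, 8))  # 499999500000
```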

The same ideas apply to software tasks. Some tasks are totally serialized and cannot use more than one CPU core for any meaningful portion of the task. Others are completely parallelizable, at least once everything has been prepared into computing chunks (jobs). That preparation is mostly serialized, and the parallel computation follows it.

For nearly everything you do on your computer, there is a varying mix of serialized and parallelizable tasks. Because of that mix, 12 CPU cores vs. 8 might help your work significantly, while 24 CPU cores might help only 10% of the time and only in short bursts, with the net result that everything gets done just 2% faster with twice as many CPU cores!

Below, Adobe Photoshop panorama assembly makes poor use of the 24 CPU cores. This task is inherently difficult to parallelize. It will run about as fast with 6 or 8 CPU cores as with 16 or 24.

Constraints on parallelizable tasks

Software tasks range from entirely serialized to fully parallelizable, though the latter does not really exist, since preparing for parallel computation is itself serialized to some degree.

When judging performance vs. CPU cores, what counts is the time the entire task takes to complete. If a task could run twice as fast for 10% of its runtime, that’s only a 5% improvement in the total runtime, which is of no meaningful value.
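That arithmetic is Amdahl's law: the part that speeds up shrinks, the rest stays exactly as it was. A minimal Python sketch (hypothetical function name) reproduces the 10% example. The loop at the end applies it to a hypothetical task that is 90% parallelizable, to show how each additional batch of cores buys less.

```python
def amdahl_relative_runtime(parallel_fraction: float, speedup_of_part: float) -> float:
    """Amdahl's law: total runtime relative to the original (1.0).

    parallel_fraction is the share of the original runtime that speeds up;
    speedup_of_part is how much faster that share runs (e.g. n cores at
    perfect scalability). The remaining share runs exactly as before.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    return (1.0 - parallel_fraction) + parallel_fraction / speedup_of_part


# The example from the text: 10% of the runtime runs twice as fast.
print(round(amdahl_relative_runtime(0.10, 2), 3))  # 0.95, i.e. only 5% faster

# Hypothetical task that is 90% perfectly parallelizable: diminishing returns.
for cores in (8, 12, 24):
    print(cores, round(amdahl_relative_runtime(0.90, cores), 4))
```

Because the unchanged share never shrinks, even infinitely many cores cannot bring the runtime below that share, 0.90 in the 10% example.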

Doubling the number of CPU cores might reduce runtime by 49% (near-perfect scalability), 20% (partial scalability), or 0% (no scalability; serialized), or might even make the task 20% slower than with fewer CPU cores, when the software’s design adds bottlenecks and overhead that cause contention.
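How can more cores make a task slower? A toy model shows the mechanism: runtime is a serial part, plus a parallel part divided among the cores, plus a coordination overhead that grows with every core added. Every number below (20% serial, 80% parallel, 2% overhead per extra core) and the linear overhead term are hypothetical assumptions, not measurements of any application; the function names are also hypothetical.

```python
def modeled_runtime(cores: int, serial: float = 0.2, parallel: float = 0.8,
                    overhead_per_extra_core: float = 0.02) -> float:
    """Toy model (all parameters hypothetical): serial part, plus a perfectly
    split parallel part, plus coordination overhead per core beyond the first."""
    return serial + parallel / cores + overhead_per_extra_core * (cores - 1)


def best_core_count(max_cores: int) -> int:
    """Core count from 1..max_cores with the lowest modeled runtime."""
    return min(range(1, max_cores + 1), key=modeled_runtime)


for n in (1, 4, 8, 16, 24):
    print(n, round(modeled_runtime(n), 3))
print("fastest:", best_core_count(24))  # fastest: 6
```

In this model, going from 8 to 16 cores makes the task about 25% slower, because the added overhead outweighs the shrinking parallel share.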

A single application often performs many different tasks, so the application as a whole cannot be called “scalable”: it may have dozens of functions, each scaling differently.

Some tasks may be highly scalable and others serialized within the same application. For example, Adobe Photoshop has a high degree of parallelism for RAW file conversion (assuming a fast drive for reading/writing), but is serialized when executing JavaScript or assembling panoramas.

Computing tasks show reduced scalability for many reasons:

- A computing task may be inherently serial so that only one CPU core can be used.

- Software written “single threaded”, i.e., badly coded to use only one CPU core.

- Software fails to overlap disk I/O with computation (single threaded with respect to I/O).

- Inherent chokepoints that force CPUs to idle while waiting for sub-tasks.

- Artificial chokepoints (poor coding) that force CPUs to idle while waiting for sub-tasks.

- Contention among CPU cores for the same resources, e.g., memory bandwidth and/or I/O.

- I/O-bound computation: data needed for computation is read from or written to disk or network too slowly, even if the software overlaps I/O with computation.

- Thermal throttling: the CPU gets too hot, so the operating system reduces clock speed, slowing everything down. I once saw my MacBook Pro drop to 0.8 GHz on a hot day, making it unusable.

- Reduced clock speed: as more CPU cores are used, the clock speed is reduced more and more. Effectively, each CPU core becomes slower.

- Full-stop pauses that require user input.
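One item above, software that fails to overlap disk I/O with computation, has a well-known remedy: read the next chunk while the current one is being processed. Below is a minimal Python sketch of that producer/consumer pattern for hashing a file. A reader thread fills a small bounded queue, and the main thread hashes whatever has arrived. The function name and chunk sizes are hypothetical, and this is not how diglloydTools is implemented. It relies on file reads and large `hashlib` updates both releasing the GIL, so the two threads can genuinely run at the same time.

```python
import hashlib
import queue
import threading


def hash_file_overlapped(path: str, chunk_size: int = 8 * 1024 * 1024) -> str:
    """SHA-512 of a file, reading ahead while earlier chunks are hashed."""
    chunks = queue.Queue(maxsize=4)  # bounded: caps memory held in flight
    errors = []

    def reader() -> None:
        try:
            with open(path, "rb") as f:
                while True:
                    chunk = f.read(chunk_size)
                    if not chunk:
                        break
                    chunks.put(chunk)  # blocks if the hasher falls behind
        except Exception as exc:
            errors.append(exc)
        finally:
            chunks.put(None)  # always signal the end, even after an error

    thread = threading.Thread(target=reader, daemon=True)
    thread.start()
    h = hashlib.sha512()
    while True:
        chunk = chunks.get()
        if chunk is None:
            break
        h.update(chunk)  # computation overlaps with the next read
    thread.join()
    if errors:
        raise errors[0]
    return h.hexdigest()
```

Calling `hash_file_overlapped("/path/to/large/file")` returns the same digest as reading the whole file and hashing it in one go; the difference is only in how the waiting is arranged. If the drive is slower than the hashing, the task remains I/O bound no matter how well the work overlaps.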

Conclusions

This article discussed the reasons more CPU cores might or might not speed up your workflow. The next article will go into how to observe and assess CPU core utilization with specific examples.

If you need help working through your requirements, consult with Lloyd before you potentially buy too much or too little, and see my Mac wishlist of current models, first pondering whether refurbished or used Macs might do the job for you.

Lloyd’s photo web site is diglloyd.com, computers is MacPerformanceGuide.com, cycling and health are found at WindInMyFace.com, software tools including disk testing and data integrity validation at diglloydTools.com. Patreon page.
