Apple has heard for years—and rightfully so—that Siri is its worst product. As digital assistant tools like Amazon’s Alexa and the Google Assistant reached their zenith of popularity, Siri, by comparison, felt slow, dull, and isolated from many of the smart-home and other types of products that were integrating with its rivals.

And though the shine of Siri’s closest rivals has worn off, ChatGPT has since come on the scene, sending the likes of Google and Microsoft scrambling to inject new artificial intelligence features into their massive platforms.

Through all of this, Siri has remained, well, Siri: always happy to start a timer or turn on a light, but reluctant to answer an actual question that doesn’t have to do with the weather or long multiplication. But if Apple’s demonstration during its WWDC keynote on Monday is any indication, that’s changing soon. During that keynote, Apple unveiled all of the AI-related features it’s been working on to fend off its familiar rivals Microsoft and Google, as well as its new frenemy, OpenAI.

It’s Not Just AI. It’s Apple Intelligence

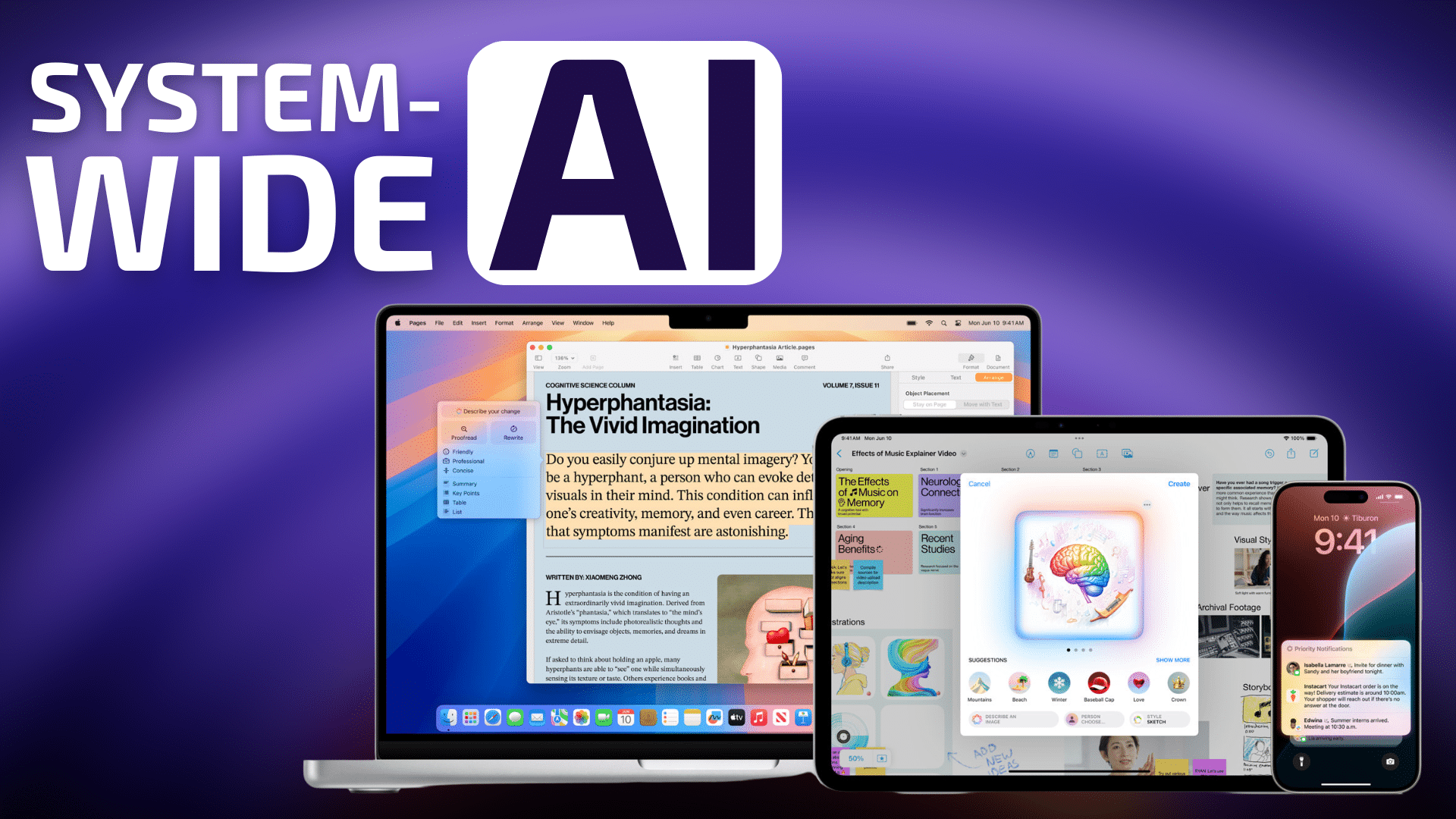

As Apple typically does with new concepts and product categories, the company has been working to put its own spin on the technology before releasing it into the world. For AI, that means adding privacy safeguards, avoiding controversial features like realistic image and video generation, and making sure the features are deeply integrated into its iOS, iPadOS, and macOS platforms and products.

Of course, Apple wasn’t just going to call these new features “AI” features and move on. As it has done with displays, ARM processors, haptic feedback, and countless other technologies, Apple has rebranded AI in its own likeness, calling all of the new AI features “Apple Intelligence.”

Unlike chatbots such as ChatGPT, which have become the most popular way to interact with AI, Apple Intelligence features are designed to integrate into existing Apple device features and workflows. More often than not, these features don’t require you to do anything at all, instead offering themselves up to help speed up everyday tasks on your device.

Let’s dive into all the new AI features Apple is readying for release in iOS 18, iPadOS 18, and macOS Sequoia.

Capabilities

Let’s start off by laying out the types of capabilities Apple Intelligence has. There are three primary ways Apple Intelligence will be prepared to assist and augment your use of an iPhone, iPad, or Mac: Language, Images, and Actions.

Architecture, Semantic Index, and Personal Context

Each of these types of capabilities is augmented by Personal Context, which Apple uses to build a semantic index at every level of the Apple Intelligence model. In short, Apple Intelligence features have device-wide access to anything on your device that could give the model the context it needs to deliver the results you expect.

This includes text messages, emails, and other data that give Apple Intelligence the ability to glean deeper insights into your requests, similar to how a friend or actual assistant would understand what you mean when you say, “I need some research on my Tuesday meeting with Bob.” A real assistant would likely know not only who “Bob” is, but why you’re meeting with Bob and how you should prepare based on your relationship to Bob.

If this sounds like a security nightmare, Apple says that all personal context that Apple Intelligence gleans from your device stays on your device, and that any assumptions and feedback the model gives in response to personal context are computed on-device rather than in the cloud.

Because much of Apple Intelligence is computed on device, you won’t be able to use the features on just any iPhone, iPad, or Mac. In fact, the only iPhones that will be supported at launch are the iPhone 15 Pro models. The only Macs and iPads that will be supported are those with an M1 chip or better.

Personal Context is really the key to what will make Apple Intelligence stand out from other tools like ChatGPT, which don’t have secured access to your personal details. To be clear, Apple will be pushing some requests to the cloud, but it will do so using custom-built servers that provide what Apple calls Private Cloud Compute.

These servers were built with Apple Silicon and provide a high degree of encryption for any Apple Intelligence tasks that need to be passed off-device.

Language

The first type of capability Apple Intelligence provides is Language, which manifests throughout a given device through system-wide writing tools, the categorization of emails and notifications, and the ability to record voice notes and even phone calls and have those recordings transcribed instantly.

Images

The second major capability type is image generation, which is likely the most-used AI-fueled feature right now. Apple calls its image generation tool Image Playground and will make it available in apps like Messages, Notes, Keynote, Freeform, and Pages, as well as in third-party apps.

An important note about Apple’s implementation of image generation through Image Playground is that creating realistic-looking images is not an option. Instead, you’ll be able to create images in one of three styles: animation, sketch, and illustration. This is likely Apple’s way of avoiding the controversy that would come with billions of users suddenly being able to generate AI images that could be mistaken for real ones.

Another form of image generation in Apple Intelligence is called Genmoji. This feature allows you to provide a descriptive prompt that is then used to create a custom emoji. Apple says you’ll be able to create “Genmoji of friends and family based on their photos. Just like emoji, Genmoji can be added inline to messages, or shared as a sticker or reaction in a Tapback.”

Actions mean a big Siri upgrade

The final capability type is actions, which builds on Apple Intelligence’s language capabilities and its understanding of on-device Personal Context. These new action capabilities translate directly into a massive upgrade for Siri, which will now be able to handle specific tasks using, and even within, specific apps on your device.

For instance, you’ll be able to tell Siri something like “Pull up a photo of Jen wearing a ballcap,” and Siri will surface all photos that match that description. Or you could ask Siri, “When and where is my meeting with John?” When Siri replies, you can even follow up on that initial query with a request: “Give me directions,” and Siri will know that you mean directions to the meeting location you just asked about.

Another cool, Apple Intelligence-fueled feature coming to Siri is on-demand technical support for Apple devices. You’ll soon be able to ask Siri how to do certain things or toggle settings on iPhone, iPad, and Mac, and Siri will be able to answer you with step-by-step instructions. “Users can learn everything from how to schedule an email in the Mail app, to how to switch from Light to Dark Mode,” Apple says.

And because Siri has much more capability now, Apple has redesigned the Siri interface. Now when you talk with Siri, instead of a floating orb, you’ll see a rainbow glow surround the edges of the display. And, for the first time, you’ll be able to make a request to Siri without talking. With a double tap of the home bar at the bottom of the screen, you can type a request to Siri.

ChatGPT integration

But Apple isn’t relying solely on its own model to provide users with a top-notch AI experience. It has also partnered with OpenAI to provide system-wide integration of ChatGPT for free.

By default, Siri will try to handle any request you make. But you’ll also be able to ask Siri to use ChatGPT to complete the request, and in some situations, Siri will suggest ChatGPT and ask your permission to send the request and any needed data there.

Apple Intelligence Features Coming to Mac, iPhone, and iPad

OK, with that very basic understanding of what Apple Intelligence is and is capable of, let’s take a look at how those capabilities translate into new features across iOS 18, iPadOS 18, and macOS Sequoia.

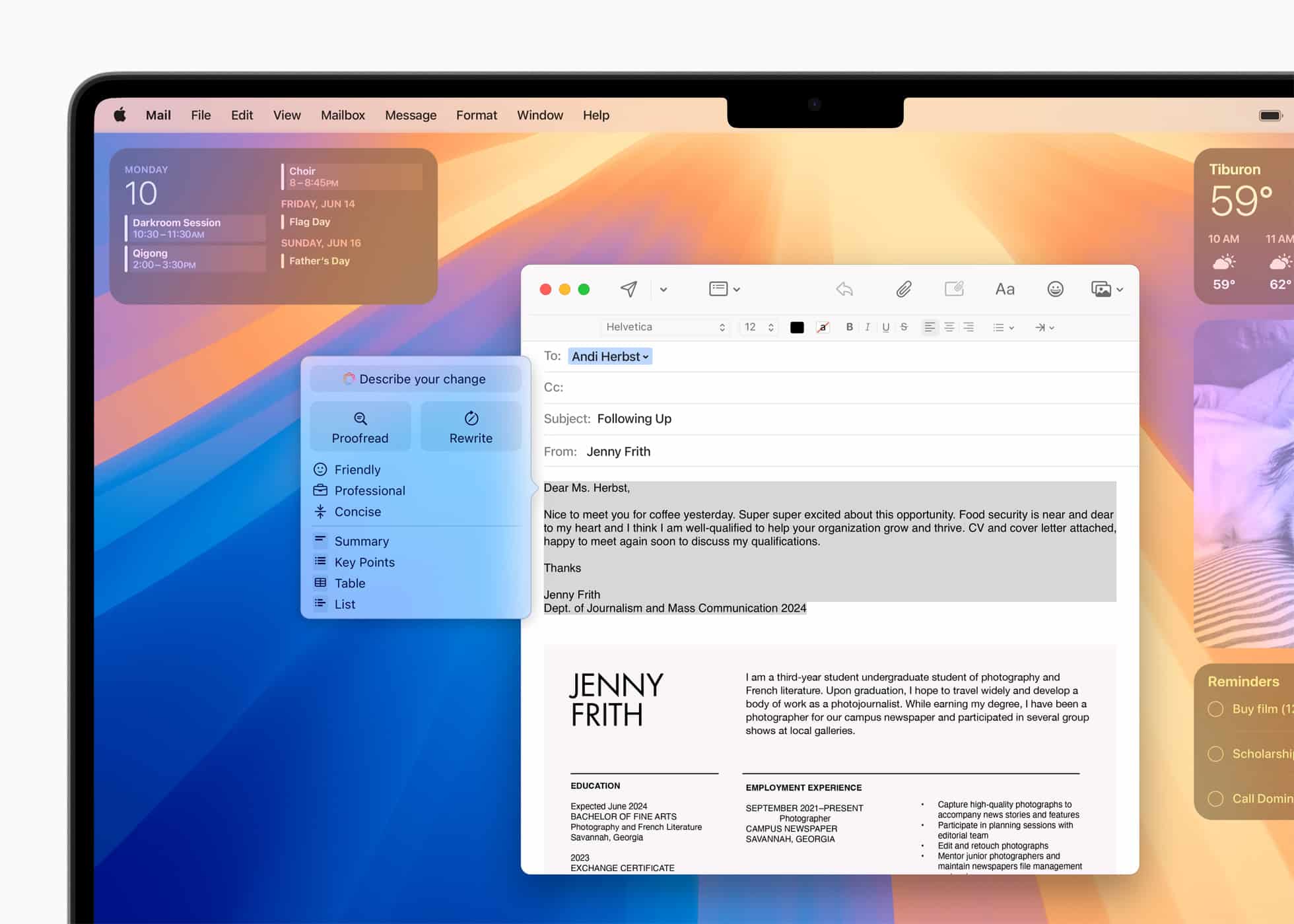

Writing Tools

As mentioned before, Apple Intelligence includes system-wide writing tools that will be built into macOS Sequoia. In apps like Mail, Notes, and Pages, and pretty much anywhere else there’s a text field to type into, you’ll have access to tools that can proofread, suggest grammar corrections, summarize, and even suggest rewrites of your text.

Of all the writing tools available, Rewrite will likely be the most useful. Once you’ve taken a stab at what you’d like to communicate, you’ll be able to tell your Mac to offer up some suggested rewrites. In fact, you can even tell your Mac to change the tone of what’s written to better fit whom you’re addressing.

Image Playground

Image Playground, discussed above, will be available to generate images in apps like Mail and Messages and will also be a standalone app. Again, you’ll be able to create emojis, sketches, animations, and illustrations with this simple-to-use tool.

Image Playground will allow you to create a specific prompt or choose from a range of concepts from categories like themes, costumes, accessories, and places. You’ll even be able to choose a non-realistic generated image of someone from your personal photo library to include in the larger generated image.

In Notes and Freeform, you’ll have the option of accessing Image Playground through the new Image Wand in the Apple Pencil tool palette.

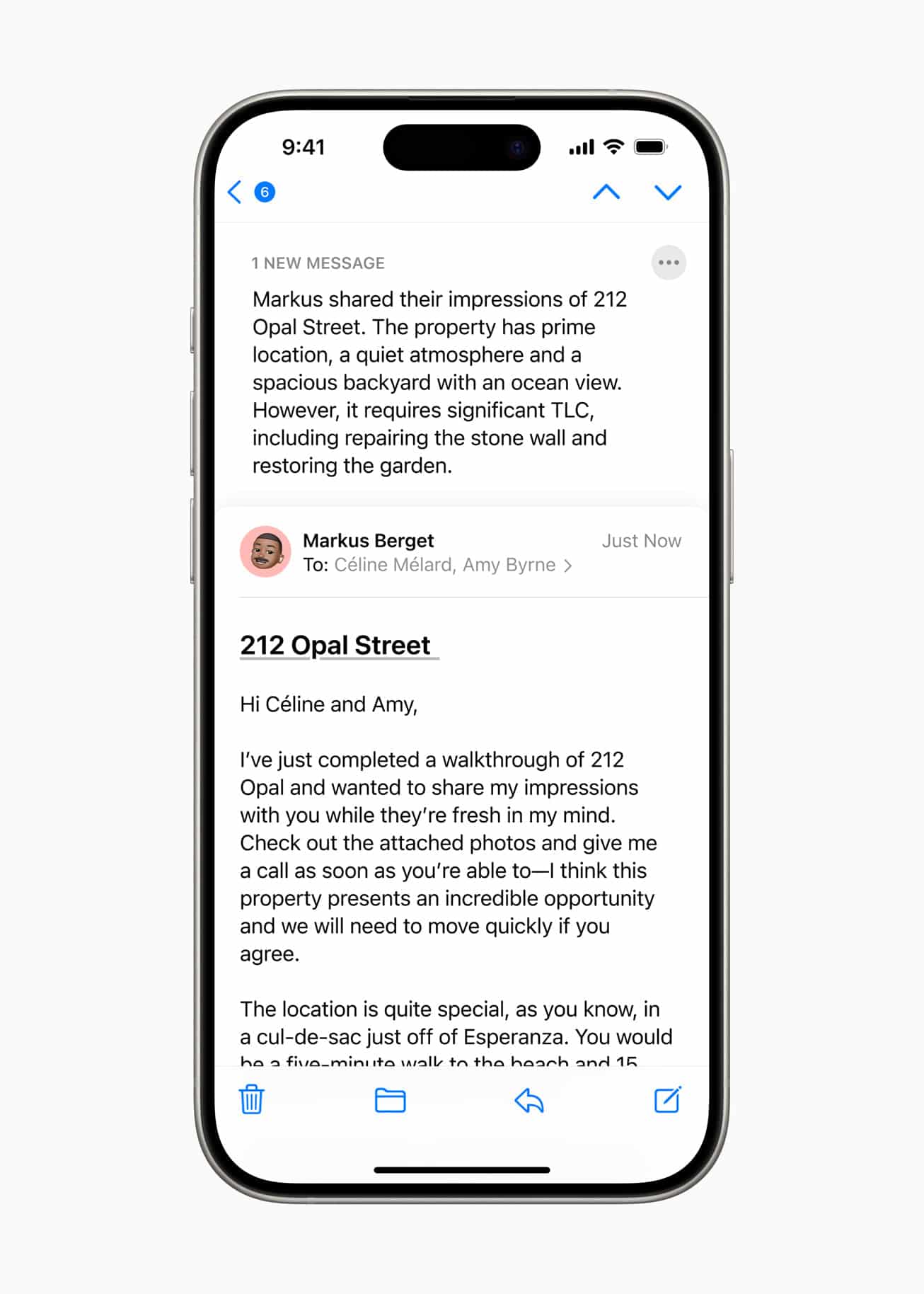

Mail

Integrating both of the above features, the Mail app across iPhone, iPad, and Mac is getting some major new capabilities.

The new Mail app will be able to analyze your inbox and surface a Priority Messages section at the top that shows your most urgent emails.

Plus, rather than showing the first few lines of a message as a preview, Mail will show a quick summary of that email, giving you an at-a-glance understanding of a message without even having to open it.

Another new feature in Mail is called Digest.

Mail will group together particular chains of emails from businesses around a purchase or appointment, providing a summary of all those emails and extracting the most important details such as date, time, pickup or venue location, and more, keeping you from having to go through the many emails that provide those details.

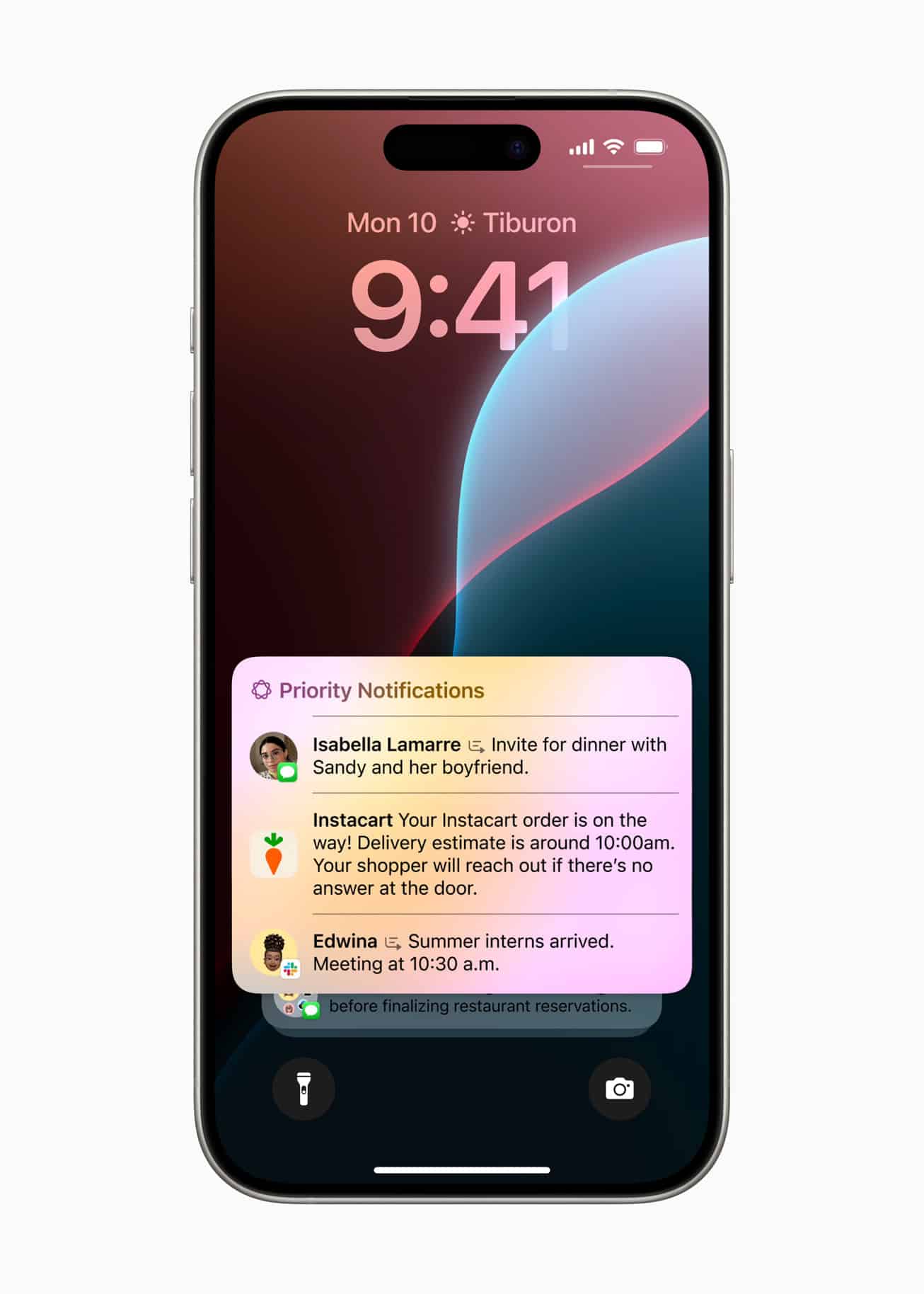

Priority Notifications

Similar to how Mail will attempt to surface only the most important emails, Apple Intelligence will also take in all of your notifications and display only what’s most important. You’ll also be able to get summaries of long or stacked notifications that show key details and updates from that thread of notifications.

For instance, let’s say a family group chat has absolutely blown up your phone in the last hour while you were handling an errand. You’ll be able to see a summary of that group chat and all pertinent details.

There’s also a new Focus mode called Reduce Interruptions, which seeks to provide an experience close to Do Not Disturb but is smart enough to know when to let certain important notifications through, such as an emergency closing at your child’s daycare.

Audio Transcription in Notes and Phone

In the Notes and Phone apps, you’ll now be able to record audio or a phone call and have that audio transcribed and summarized. When a recording is initiated while on a call, participants are automatically notified, and once the call ends, Apple Intelligence generates a summary to help recall key points.

Coming later this year

Apple has released betas of iOS 18, iPadOS 18, and macOS Sequoia to developers, and the public beta of these releases will be available next month. At this time, the Apple Intelligence features are not present in the betas.

So, what do you think of Apple Intelligence? Are you excited to try these new features and capabilities? Optimistic that Siri will be useful for the very first time? Let us know in the comments.
