By Lloyd Chambers, Guest Blogger

An overlooked aspect of data management is data integrity: are the files intact tomorrow, a year from now, on the original drive and backup drives, or perhaps even on a DVD or Blu-ray? Or after having been transferred across a network?

Knowing that files and data are intact and undamaged is a key part of any system restoration, update, backup, or archiving process. In some situations, such as record keeping, it may be mandatory. The more valuable the data, the more important it is to consider the risks of loss, which include file corruption as well as file deletion, not to mention viruses, software bugs, and user errors.

“Data” can mean image files (JPEG, TIF, PSD/PSB, etc.), video clips or projects, Lightroom catalogs, and so on. Or it could mean spreadsheets, word processing files, or accounting data. Knowing that these files are 100 percent intact makes it far more comfortable to change systems or storage approaches.

How can data be damaged? Disk errors can occur, as can software bugs in applications, drivers, or the system itself. Moreover, the “damage” could be user-induced: inadvertently saving over, replacing, or deleting a file. Simply having a “warning flag” that signals when a file expected to stay unchanged has in fact changed can be useful.

For example, suppose that a new computer system is acquired and various drives need to be transferred over. Or that you have upgraded to a newer and larger hard drive. Or swapped SSDs. Or there is a need to restore from a backup. Or that you burned files to a DVD or Blu-ray: are they intact with no changes? Even RAID 5 with its parity data does not validate files when reading them, and a validation pass covers the entire volume and cannot be limited to the files of interest.

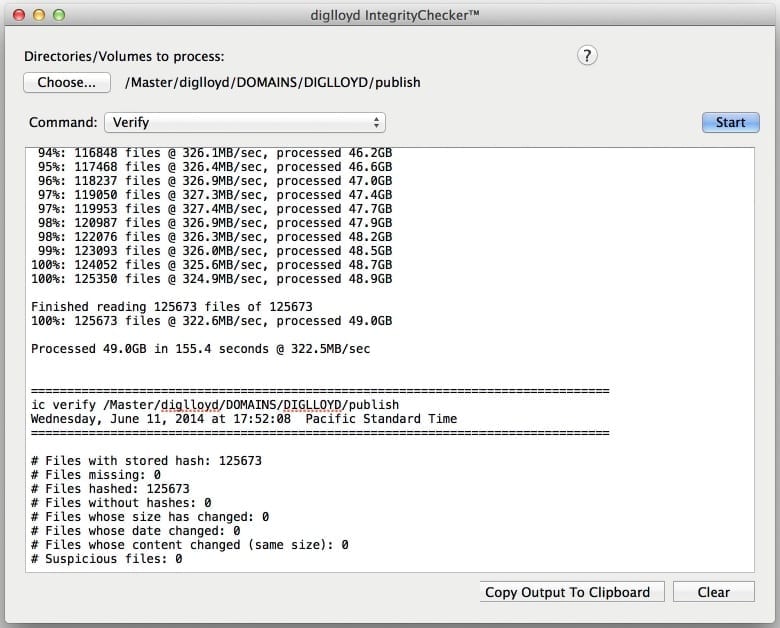

Enter IntegrityChecker, part of diglloydTools. Files of any and all types can be checked against a previously computed “hash”, a cryptographic fingerprint that is, for practical purposes, unique to the file’s contents. If there is a mismatch, the file has been altered somehow. This check can be made at any time: on the original, or on a 1,000th-generation copy of that file. The only requirement is that the hash be computed once and remain in the same folder as the file for later reference.

How it works with IntegrityChecker

IntegrityChecker computes a SHA-1 cryptographic hash for each file in a folder, storing those hash values in a hidden “.ic” file within that folder. Thus, every file in the folder has a “hash value” against which its current state can be checked.
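To make the idea concrete, here is a minimal Python sketch of per-folder hashing. It is not IntegrityChecker's code, and the format of the real “.ic” file is not described here; the manifest name `.hashes.json`, its JSON layout, and the function names are assumptions chosen purely for illustration.

```python
import hashlib
import json
from pathlib import Path

# Hypothetical manifest name and format; NOT the real ".ic" file.
MANIFEST_NAME = ".hashes.json"


def sha1_of_file(path, chunk_size=1 << 20):
    """Return the SHA-1 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(folder):
    """Hash every regular file directly inside `folder` and store the results.

    The manifest itself is skipped so that it never records its own hash.
    """
    folder = Path(folder)
    hashes = {
        entry.name: sha1_of_file(entry)
        for entry in sorted(folder.iterdir())
        if entry.is_file() and entry.name != MANIFEST_NAME
    }
    (folder / MANIFEST_NAME).write_text(json.dumps(hashes, indent=2))
    return hashes
```

Because the manifest sits inside the folder it describes, any copy of the folder carries its reference hashes along with it.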

The process can be run on folders, or an entire volume.

- Run on the original files (computes and writes the hash values for every file in each folder into a hidden “.ic” file in that folder).

- Make the copy or backup or burn the DVD/Blu-ray or whatever (this naturally carries along the hidden “.ic” file in each folder).

- At any later time (tomorrow or a year later), run on any backup or copy (this recomputes the hashes and compares to the values in the “.ic” file).
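The three steps above can be sketched in Python as follows. This is an illustration of the workflow under stated assumptions, not how IntegrityChecker is implemented: the manifest name `.hashes.json`, its JSON layout, and the `update` and `verify` functions are all hypothetical stand-ins.

```python
import hashlib
import json
from pathlib import Path

# Hypothetical stand-in for the hidden ".ic" file; the real format is not shown here.
MANIFEST_NAME = ".hashes.json"


def sha1_of_file(path):
    """Return the SHA-1 hex digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def update(folder):
    """Step 1: record a hash for every file directly inside the folder."""
    folder = Path(folder)
    hashes = {
        entry.name: sha1_of_file(entry)
        for entry in folder.iterdir()
        if entry.is_file() and entry.name != MANIFEST_NAME
    }
    (folder / MANIFEST_NAME).write_text(json.dumps(hashes))


def verify(folder):
    """Step 3: recompute hashes; return names of files that changed or vanished."""
    folder = Path(folder)
    recorded = json.loads((folder / MANIFEST_NAME).read_text())
    problems = []
    for name, expected in recorded.items():
        path = folder / name
        if not path.is_file() or sha1_of_file(path) != expected:
            problems.append(name)
    return sorted(problems)
```

Step 2 is an ordinary copy, for example `shutil.copytree(original, backup)`, which brings the manifest along. Calling `verify(backup)` afterward returns an empty list when every recorded file still matches its stored hash.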

For example, some pro photographers burn DVD or Blu-ray discs containing folders on which IntegrityChecker has been run; these discs carry along the “.ic” file in each folder, and thus can be verified at any time. There are numerous such uses.

Usage

Both command-line (Terminal) and GUI versions are provided. The GUI is basic, but the internals are what count: one of the most efficient multi-threaded programs of any kind you’ll ever find. IntegrityChecker runs as fast as the drive and CPUs can go. Available commands include “status”, “update”, “verify”, “update-all” and “clean”.

Worth doing or happy go lucky?

For many computer users, the consequences are of little importance if a few things go bad: a song, a picture, a particular document; no big deal. But even such users would be upset at losing years of photos. Bugs in software (gray swan?) can have widespread impact, and data integrity checking is a sanity check on assumptions.

But where financial stakes and professional obligations are involved, professionals need to consider the end-to-end processes they use. When data is one’s livelihood, attention to data integrity takes on new importance.

The greater the value of the data and the longer the time span over which the data has value, the more important it is to implement processes that minimize the chances of loss, because over the years the data is likely to be moved and copied as storage formats change. Also, knowing that a backup restored after a crash is valid takes some of the sting out of the crash.