This is the 6th in a series of articles from Mac expert Lloyd Chambers examining the many considerations Mac users must weigh when choosing the best Mac for their needs.

The Ultimate Mac Buyer’s Guide, Part 1, outlines how best to choose a Mac for your needs. Part 2 assesses the huge value of new vs. used Macs. Part 3 digs into high-end configuration considerations. Part 4, How Much Memory Does Your Workflow Require?, shows how to find the optimal amount of memory for your workflow. Part 5 discusses how CPU cores are used (or not). It is best to read the prior parts first, and in order.

Here, Lloyd discusses how GPU cores apply to workflow.

About GPU cores

GPU core usage in a Mac is more sporadic than CPU core usage. For some tasks, GPU cores are irrelevant or of no meaningful import. For others, it’s all about GPU cores, with the CPU cores doing little more than coordinating data management for the GPU computations.

A GPU core (“Graphics Processing Unit”) executes computer instructions just like a CPU core (“Central Processing Unit”). However, GPU cores have more specialized instructions targeted at more restricted (less general-purpose) computation: nominally graphics operations, but now also AI and all sorts of other things.

Today’s Apple Silicon (M1/M2/M3) GPUs can have as many as 76 GPU cores, as found on Apple’s M2 Ultra. An expected M3 Ultra (two M3 Max chips at up to 40 GPU cores each) could have as many as 80 GPU cores.

Generally speaking, GPU cores are oriented to parallel operations: all cores do the same task, each on its own piece of data, until the job is done. GPUs are ill-suited to running unrelated tasks simultaneously. They do not play well with multiple programs at once, or even with different tasks in the same application.

Even a single application like Photoshop will NOT allow you to work interactively while some jobs are running on the GPU (e.g. Enhance Details); you are blocked from proceeding until the GPU is free. Allowing otherwise would likely crash the application. And in general, GPU support has long been a science fair project with all sorts of reliability problems.

But here in 2024, as APIs have evolved, things are pretty stable on Apple Silicon—much more so than on Intel Macs.

A fun bit of history: CPU, then GPU, then lots of ’em

When I took computer graphics at Stanford way back in ~1984, there were no GPU cores. We had a single CPU (one CPU core) that was very slow and had a few kilobytes of memory. We wrote code to draw lines and triangles using that single CPU core. There were no “threads”, no multithreading, just one flow of control. We had BSD Unix and/or the yucky VMS operating systems.

Those were the days of single-CPU systems, with the “big ones” running Unix or VMS “time sharing”, something we now take for granted even on a personal computer: an operating system that can handle numerous (thousands of) processes or users all at once. In one dorm, a guy with a 5MB hard drive (yes, 5 megabytes) was super popular for writing papers instead of using a typewriter (luckily he had a minimal need for sleep).

It was a very exciting period but no picnic: to this day, I have a hard-coded habit of hitting control-S frequently (to save my work) because when 50 students on “dumb” CRT terminals were all trying to finish the assignment on Sunday night, the time-sharing mainframe would crash every 10 minutes. In terms of processing power, the mainframe hardware of that era was akin to a slide rule compared to today’s iPhone.

Pretty quickly, and with the popularity of personal computers, it became clear that the main CPU was overburdened. Using a general purpose CPU to draw lines and triangles and circles and refresh the screen sucked up so many CPU cycles that everything else suffered. Indeed, I once had a computer (Sinclair ZX81 ~1982) with a FAST and SLOW button on its chiclet-style keyboard. FAST meant the screen would go blank! You’d periodically press it again to see if the program was done:

“Like the ZX80 before it, the CPU in the ZX81 generated the video signal. In FAST mode, the processor stopped generating the video signal when performing other computing tasks, resulting in the TV picture disappearing. Each key press resulted in a blank screen.”

This situation was untenable; hence the genesis of a complementary chip, the GPU, whose job it was to draw stuff and to wrangle bits for the screen via “bitmaps” and “bitmapped memory”. From there it got more and more sophisticated and is now billions of times faster and with very powerful instruction sets. A GPU today can do a lot more than basic drawing tasks.

For a while, there was also “the GPU”, meaning a single core, just like the original (one) CPU. On modern chipsets, we still say “GPU”, but it is taken to mean the collection of numerous GPU cores along with associated memory and supporting circuitry.

GPU cores require parallelism to prosper

GPU systems are built to have all the cores (or at least most of them) used at once, that is, in parallel. Since there are usually many more GPU cores than CPU cores, if the task can be “chunked,” there can be a huge improvement from having, say, 64, 80, or 96 GPU cores all crunching the numbers simultaneously: parallel computation.

Typically these computations are structured by the CPU, which also coordinates obtaining input data and saving results in some way. For this reason, every GPU-accelerated task uses both CPU and GPU cycles. A GPU-intensive task might show only one or two CPU cores in use, with most of the work being done by the GPU cores. Those CPU cores are managing the process but not doing much computation of their own.

In general, CPU cores are much better suited to running independent tasks in parallel. For example, suppose there are 24 camera RAW files needing processing with Adobe DNG Converter. If there are 24 CPU cores, each CPU core can independently handle one of those files, so that 24 files are processed in parallel by 24 CPU cores. GPU cores typically cannot be used that way.
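To make the CPU case concrete, here is a minimal Python sketch of this kind of task-level parallelism, assuming each RAW file can be processed independently of the others. `convert_one` is a hypothetical placeholder that only computes an output file name; a real version would invoke the converter, whose command-line options are not covered here. Python's standard `concurrent.futures.ProcessPoolExecutor` hands whole files to worker processes, one per CPU core by default.

```python
import os
from concurrent.futures import ProcessPoolExecutor
from pathlib import Path


def convert_one(raw_path: str) -> str:
    """Hypothetical stand-in for converting one RAW file to DNG.

    A real workflow would run the converter here; this placeholder only
    derives the output file name so the sketch runs anywhere.
    """
    return str(Path(raw_path).with_suffix(".dng"))


def convert_all(raw_paths: list[str], workers: int = 0) -> list[str]:
    """Process independent files in parallel, one whole file per worker at a time."""
    # Default to one worker process per CPU core.
    workers = workers or os.cpu_count() or 1
    # Each file is a separate job; workers never need to coordinate with each other.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(convert_one, raw_paths))
```

Called from a script's `if __name__ == "__main__":` block with 24 file names and `workers=24`, each core would take one file. Processes are used rather than threads because, under standard CPython's global interpreter lock, pure-Python CPU-bound work in threads does not run in parallel.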

GPU cores are much more parallelism-oriented as a group. It’s not a situation where one GPU core does one thing, another core does another thing, and so on. Simplifying a bit, the GPU cores are designed to do one thing (the same thing) on all the GPU cores at once. For example, a single image file is loaded with its data organized just so, then the complex computation of Enhance Details is performed on all of its pixels in parallel. Or a matrix or vector math operation, or cryptographic hashing, or anything else where a large set of data/numbers all need the same operation done in parallel.
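The GPU model can be illustrated with a toy Python simulation, offered only as a sketch of the idea and not of how Metal or Photoshop actually schedule work. One kernel function (a hypothetical brightness tweak on 8-bit pixel values) is applied to every element, and the index range is split into one contiguous slice per "core". The loop here runs serially; on a GPU the slices would execute at the same time.

```python
from typing import Callable


def brighten_kernel(pixel: int) -> int:
    """Hypothetical per-pixel operation: the same code runs for every pixel."""
    return min(255, pixel + 40)


def run_data_parallel(pixels: list[int], kernel: Callable[[int], int],
                      n_cores: int) -> list[int]:
    """Apply one kernel to all pixels, giving each 'core' its own contiguous slice.

    Serial simulation: on real GPU hardware the slices run simultaneously.
    """
    if n_cores < 1:
        raise ValueError("n_cores must be at least 1")
    chunk = -(-len(pixels) // n_cores)  # ceiling division
    out = [0] * len(pixels)
    for core in range(n_cores):
        start = core * chunk
        stop = min(start + chunk, len(pixels))
        # Every core runs the identical kernel; only its slice of data differs.
        for i in range(start, stop):
            out[i] = kernel(pixels[i])
    return out
```

The result is identical for any core count; on real hardware only the speed would differ, which is why this kind of work scales with the number of GPU cores.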

Many tasks use a mix of CPU and GPU cores, so the use of either or both proceeds in fits and starts, hurting efficiency. Often this means intense but brief bursts of GPU activity that speed up the task as a whole only modestly. GPU use is most efficient when the same computations run over large datasets: hours of video processing, training AI models, image scaling as in Topaz Gigapixel AI, or Enhance Details on large numbers of files. Or mining Bitcoin.
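One standard way to quantify why brief GPU bursts help only modestly is Amdahl's law (a general model, not something from the original discussion). If a fraction $p$ of a task's runtime is GPU-bound and more GPU cores speed up that portion by a factor $k$, the overall speedup $S$ is at most as shown below. The $p = 0.25$ case is a hypothetical illustration using the 60 vs. 76 GPU core ratio.

```latex
\begin{aligned}
S &= \frac{1}{(1 - p) + p/k}, \qquad k = \frac{76}{60} \approx 1.267 \\
p = 0.25:\quad S &= \frac{1}{0.75 + 0.25/1.267} \approx \frac{1}{0.947} \approx 1.056
\end{aligned}
```

So about 27% more GPU throughput trims only about 5% from a task that spends a quarter of its time on the GPU; as $p$ approaches 1 (GPU utilization near 100%), $S$ approaches $k$.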

Checking GPU usage

The built-in reporting on GPU core usage in macOS Activity Monitor is relatively primitive, reflecting the difficulty of characterizing what is being done. Judging GPU usage against its potential is much harder than it is for CPU cores.

The GPU History window of Activity Monitor shows usage history, but I have seen cases where GPU usage is shown as negligible when in fact the GPU cores are cranking away. This confusing situation stems from the kind of GPU operations being done: some apparently are not accounted for in the GPU history.

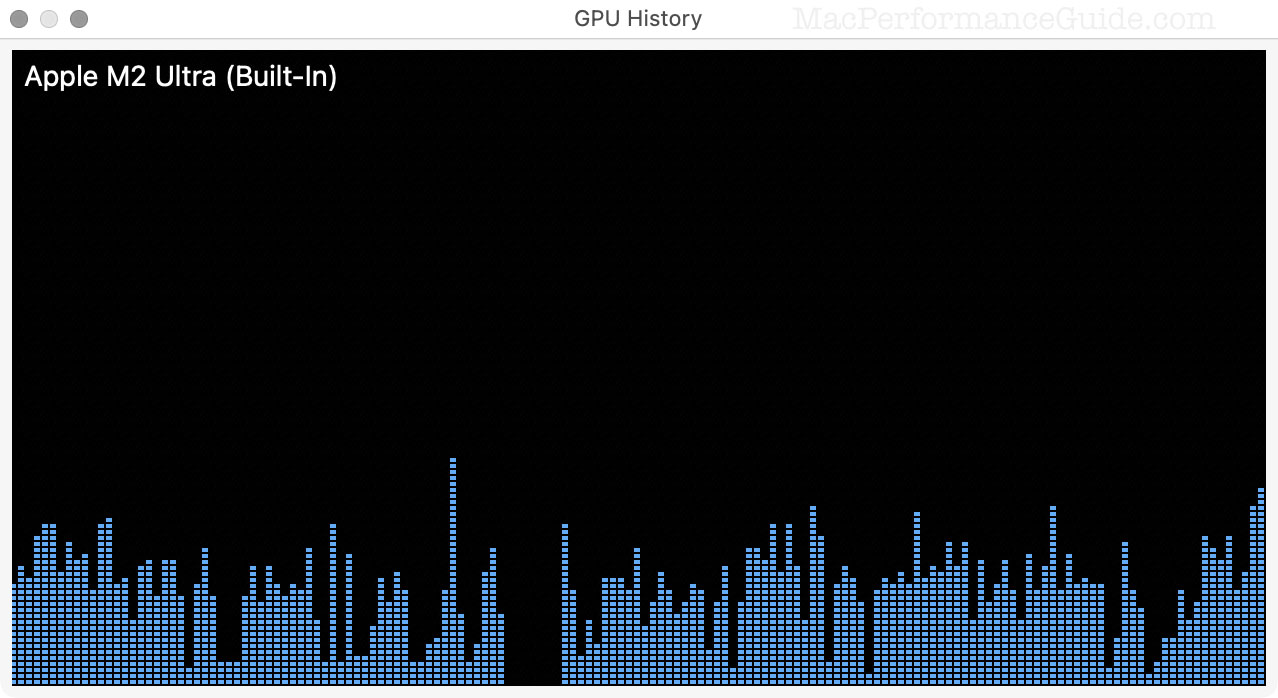

Use /Applications/Utilities/Activity Monitor.app to see GPU usage history via Window => GPU History.

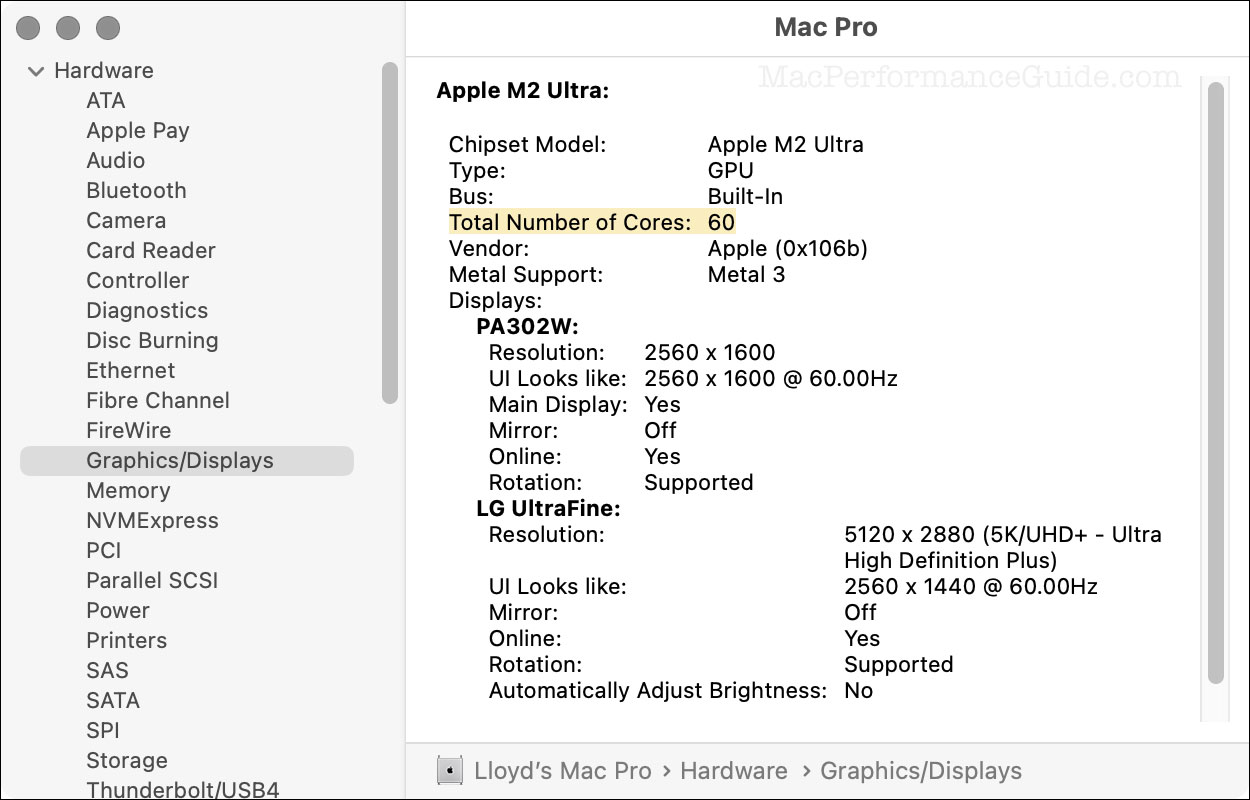

Example: even a relatively idle Mac uses the GPU continuously, if only for screen refresh and screen drawing. This alone makes assessing GPU usage far from clear-cut. Below is a Mac Pro M2 Ultra that is largely idle except for me typing as I write this article, and yet quite a lot of GPU usage is shown.

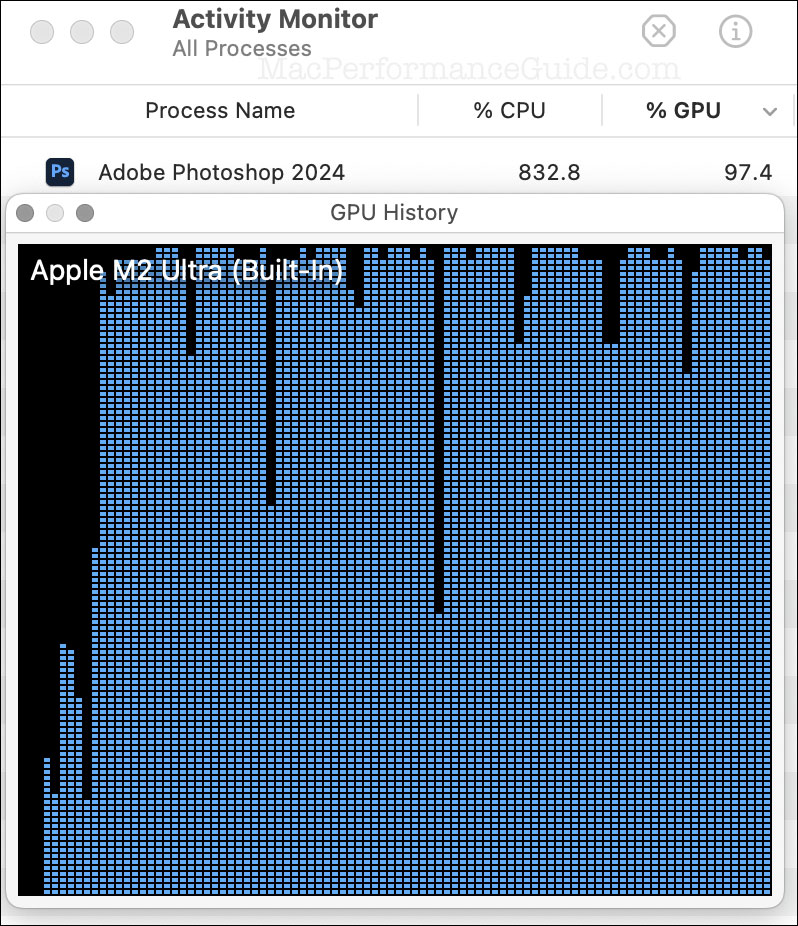

Example: below, Photoshop is running Enhance Details + AI Denoise on 100 megapixel Fujifilm GFX100S RAW files. GPU utilization is close to 100%.

Do I need a Mac with more GPU cores?

If you see nearly 100% GPU utilization for a task in your workflow (as above), then more GPU cores are likely to reduce its runtime roughly in proportion to the added cores.

For example, I bought my 2023 Mac Pro M2 Ultra with the 60-core GPU. I did not spend another $1000 for the 76-core GPU, deeming it too costly relative to the performance improvement. The speed improvement would apply ONLY for tasks where the GPU is fully utilized. And if the task is short-lived then it has no real impact on the workflow efficiency.

Example — more GPU cores

See the discussion of Constraints on parallelizable tasks in Part 5: CPU Cores.

I do a lot of work in Adobe Photoshop. The most demanding operations I perform are AI Denoise + Enhance Details.

How much faster would that run if my Mac Pro had 76 GPU cores instead of 60 GPU cores? I see a runtime of ~18 seconds per 100MP raw file. The maximum potential time savings would be about 4 seconds per file, taking about 21% less time, according to the ratio of the number of cores (60 vs 76):

18 seconds / (76 cores / 60 cores) = ~14.2 seconds per file, or about 21% less time.

That’s the best case. The actual savings would typically be less, since overhead (reading and writing files) would not change, and so such overhead would become a larger proportion of the total runtime. But in this case, I’d expect something pretty close to that, perhaps an 18% improvement (not 21%).
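The arithmetic above, including the effect of fixed overhead, can be sketched in a few lines of Python. The model is an assumption: GPU-bound time scales exactly with the GPU core count, and overhead such as reading and writing files stays constant. The 2-second overhead in the second example is a hypothetical value, not a measurement.

```python
def estimated_runtime(total_s: float, overhead_s: float,
                      cores_now: int, cores_new: int) -> float:
    """Estimate per-file runtime after changing the GPU core count.

    Assumes only the GPU-bound part (total minus fixed overhead) scales
    with the core ratio, and that it scales perfectly.
    """
    gpu_s = total_s - overhead_s
    return overhead_s + gpu_s * cores_now / cores_new


def savings_pct(total_s: float, overhead_s: float,
                cores_now: int, cores_new: int) -> float:
    """Percentage of runtime saved relative to the current configuration."""
    new_s = estimated_runtime(total_s, overhead_s, cores_now, cores_new)
    return 100 * (total_s - new_s) / total_s


# Best case: no overhead, 18 s per file, 60 -> 76 GPU cores.
print(f"{estimated_runtime(18, 0, 60, 76):.1f} s, {savings_pct(18, 0, 60, 76):.1f}% saved")
# prints: 14.2 s, 21.1% saved

# With a hypothetical 2 s of fixed file I/O overhead per file.
print(f"{estimated_runtime(18, 2, 60, 76):.1f} s, {savings_pct(18, 2, 60, 76):.1f}% saved")
# prints: 14.6 s, 18.7% saved
```

The second case shows how a couple of seconds of unchanging overhead is enough to pull the gain from about 21% down toward the 18% range.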

Would that be worth $1000 more over the 3-5 year lifespan of the machine? How much of my time would I actually save in my actual workflow? My decision to go with 60 GPU cores was solid IMO, and the $1000 can go to something else useful. But for someone crunching 8 hours of video 5 days a week, cutting 8 hours down to about 6.3 hours could pay for itself quickly.
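Whether the upgrade pays off is also simple arithmetic. This sketch assumes the video example's 8 GPU-bound hours a day and 5 days a week, plus a hypothetical 48 working weeks a year and perfect scaling from 60 to 76 GPU cores; the $1000 price difference is from the text above.

```python
def hours_saved_per_year(gpu_hours_per_day: float, days_per_week: int,
                         weeks_per_year: int, cores_now: int, cores_new: int) -> float:
    """Hours saved per year if GPU-bound work scales perfectly with core count."""
    saved_per_day = gpu_hours_per_day * (1 - cores_now / cores_new)
    return saved_per_day * days_per_week * weeks_per_year


def cost_per_hour_saved(upgrade_cost: float, hours_saved: float) -> float:
    """Upgrade price divided by hours saved (lower is a better deal)."""
    return upgrade_cost / hours_saved


saved = hours_saved_per_year(8, 5, 48, 60, 76)  # 48 weeks/year is an assumption
print(f"{saved:.0f} hours/year saved, ${cost_per_hour_saved(1000, saved):.2f} per hour saved in year one")
# prints: 404 hours/year saved, $2.47 per hour saved in year one
```

By contrast, the photo example saves roughly 4 seconds per file, so even a few thousand files a year add up to only a few hours.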

Conclusions

GPU cores can powerfully accelerate your workflow, but usually only for a few very specific tasks.

When you buy a new Mac, take stock of how much and for how long the GPU cores are used. If the extra money makes sense for the expected reduction in runtime, then bump up the GPU cores to the max. Otherwise, put that money into more CPU cores and/or more memory and/or a larger SSD.

If you need help working through your requirements, consult with Lloyd before you buy too much or too little. And see my Mac wishlist of current models, but first consider whether a refurbished or used Mac might do the job for you.

Lloyd’s photo web site is diglloyd.com; his computer site is MacPerformanceGuide.com; cycling and health are found at WindInMyFace.com; and software tools, including disk testing and data integrity validation, are at diglloydTools.com. He also has a Patreon page.

Believe it or not, BROWSERS can use A LOT of GPU cycles, while the CPU stays at moderate or even LOW utilization. This can happen if you have A LOT of open tabs.

SO, GPU usage is NOT limited to GRAPHICS-intensive applications.

I have been able to max out the GPU on a 2019 i9 iMac with 96GB RAM and a Radeon Pro 580X graphics card just with lots of browser tabs open, to the point that my cursor became jerky and unusable until I closed some tabs.

I believe it. There are computations that a GPU can do that a web browser might call upon.

And if a system library is coded to run some tasks on the GPU, any application could eat up cycles.

I have a more detailed response, but this WordPress system keeps giving me errors so I cannot include it here.


For example, cryptographic hashing is not a graphics operation at all, and yet it is the #1 computation in the world today. Bitcoin mining used GPUs for a long time; now it is mostly done on ASICs, at least as I understand it.

In this case, what is going on is more about badly coded web pages eating up computing power. We’ve all seen this with a CPU pegged at 100 percent for a web page. No reason it can’t happen with GPU cycles, but a runaway or wasteful web page is not a good reason to buy a faster GPU.